Artificial intelligence (AI) is rapidly becoming a part of our everyday lives. We use AI-powered devices and platforms in almost all areas, but increasingly so in business. As AI becomes more sophisticated, it is important to consider the ethical implications of its use.

Let’s be honest: as much as we enjoy testing the limits of ChatGPT and Google Bard, there are key ethical issues related to AI, which we’ll explore in this blog. Specifically, we’ll focus on the rise of generative AI and the potential for it to be biased, unfair, and unsafe. We will also discuss the importance of transparency and accountability in the use of AI systems, and what you can do to mitigate the ethical issues with the technology.

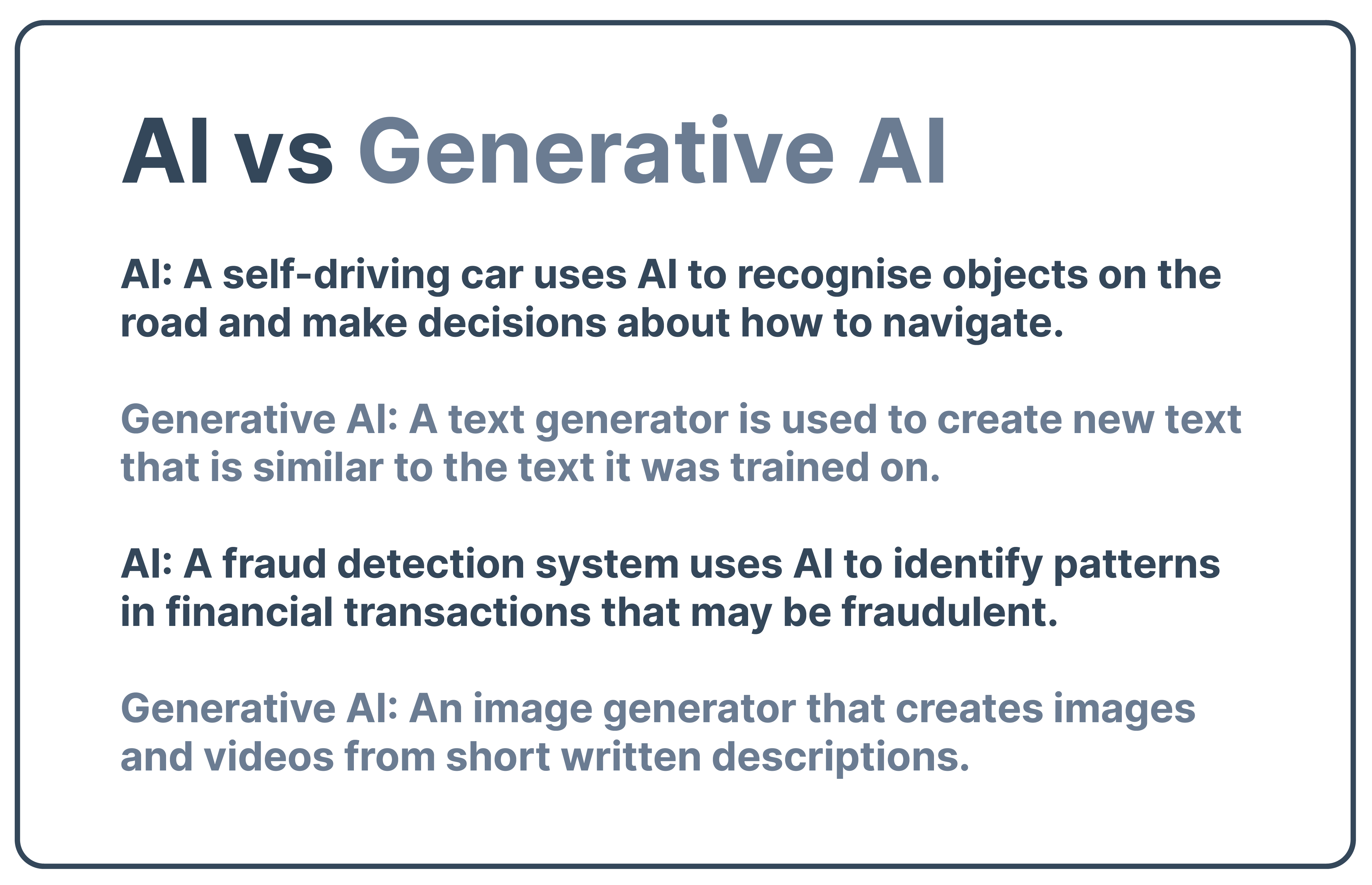

AI vs Generative AI

AI is a broad term that encompasses any technology that can simulate human intelligence. AI systems are used for a huge variety of things from driving a car to translating text to acting as a virtual assistant. It has been around for decades and businesses have been using it for just as long.

Generative AI is a subset of AI that is specifically designed to create, or generate, new content. Generative AI systems can be used to generate text, images, music, and even code. The most famous example of this at the moment would be ChatGPT, but there are scores of other platforms doing this in some way. These systems are typically trained on large datasets of existing content, from which they learn the patterns and rules they then use to create new content.

Overall, the main difference between traditional AI and generative AI is that traditional AI systems are designed to understand information, while generative AI systems are designed to create it. Traditional AI is used to analyse data and make predictions, while generative AI is used to generate new content that is similar to the data it was trained on.

Here are some examples of AI and generative AI in action:

Ethical Issues of Generative AI

As generative AI technology continues to develop, it has the potential to revolutionise the way we create and interact with content. On the other hand, there are some very real ethical issues that come from the general use of generative AI. While some are more serious than others, all deserve consideration from any creator, user, or critic of AI technology.

Deepfakes

One of the most concerning ethical issues surrounding generative AI is the potential for deepfakes and fake content. Deepfakes are visual or audio content that has been manipulated to make it seem as though someone is saying or doing something they never said or did. In general, fake AI content is any type of content that has been fabricated or altered to deceive people.

There have been some viral examples of this fairly recently: Pope Francis in a puffer coat, Mark Zuckerberg’s fake speech on the power of Facebook, Keanu Reeves on TikTok, and so many more.

And while the memes from this sort of output have been pretty amusing, deepfakes and fake content can predictably be used for malicious purposes, such as spreading disinformation, damaging someone's reputation, or even committing fraud. Imagine a video of a prominent politician calling for violence, or audio of your voice used to ask a family member for large sums of money. This isn’t just a possibility; it is already happening.

Accuracy

Can an AI lie to you? Well, it can certainly provide you with inaccurate information that, despite its inaccuracy, can be compelling and mistaken for the truth. As more and more people in business and beyond turn to AI like ChatGPT and Bard to create content, it’s worth remembering that these are AI language models. What does that mean?

It means that, while they can create content, that content is not always going to be fact-checked. There are a number of reasons for this:

- The training data is inaccurate. AI language models are trained on massive datasets of text and code. If this data is inaccurate, then the model will learn to generate inaccurate information. For example, if a model is trained on a dataset of news articles that contain factual errors, then the model will be more likely to generate incorrect information.

- The model is not able to distinguish between reality and fiction. This means that if a model is trained on a dataset that contains both factual and fictional information, then the model will be more likely to generate inaccurate information.

- The model is not able to understand the context of a question. This means that if a model is asked a question that is ambiguous or that requires an understanding of the real world, then the model may generate inaccurate information.

- The model is not automatically updated with new information. Once an AI language model is trained, its knowledge is fixed until it is retrained or given access to newer sources. This can lead to problems if the model was trained on data that is outdated or that contains inaccurate information.

In fact, according to TruthfulQA’s benchmark test, generative AI models are only completely truthful 25% of the time.

While ChatGPT provides its users with a disclaimer, people often overlook these warnings and report what’s generated as fact, which can be dangerous.

Copyright and theft of work

One major issue that we are seeing more of with generative AI is a threat to copyright. This is because of how generative AI works: it draws on already existing content to create new content. AI tools are trained on massive datasets of existing works, and they can learn to reproduce the style and content of these with great accuracy. In fact, that’s generally the selling point. Inevitably, the outputs can be substantially similar to (or in some cases, indistinguishable from) the existing works they draw on, most times without the permission of the original creator.

The result can have a major impact on the revenue for artists, not to mention their reputation. We are seeing backlash to these types of platforms, including lawsuits that claim these AI platforms are outright stealing from artists, a violation of copyright law.

Apart from legal recourse, there doesn’t seem to be much artists can do other than watermarking their work and manually scanning the internet for unauthorised copies. Of course, the law is still evolving, but it is important for any users of AI who want to stay within ethical bounds to be aware of this issue and mitigate it as best they can.

Data Privacy

Data privacy is also an area of concern for generative AI. Language models like ChatGPT and Google Bard are trained on data that is sometimes personally identifiable. This can come about from something as simple as a directional prompt in the language model to help it create content that is specific to your situation or company.

Why is this a problem? Information you provide to language models like ChatGPT may be retained by the platform and used to train it, meaning anything you put into it could potentially be used to help other users.

Samsung employees found this out the hard way. Employees of its semiconductor division used ChatGPT to help with some coding issues. To get the results they wanted, the employees entered top-secret source code and internal meeting notes. Three similar incidents involving ChatGPT were reported in the span of a few months after it became widely used by the public.

With these platforms, it can actually be really hard to request the removal of data, meaning these leaks can be out there for the public to find in perpetuity. So, while language models can be a useful tool for companies to streamline their content creation and processes, don’t forget that the data that is provided is no longer yours to control.
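One practical safeguard is to strip obviously sensitive strings out of a prompt before it ever leaves your machine. The snippet below is a minimal sketch of that idea, not a complete data-loss-prevention tool: the `redact` function, the pattern names, and the `sk-` token format are all hypothetical and chosen purely for illustration, and a real policy would need to cover far more cases (names, source code, internal project identifiers).

```python
import re

# Hypothetical patterns for illustration only; real policies need many more.
# Order matters: the token pattern runs first so that digits inside a token
# are not mistaken for a phone number.
PATTERNS = {
    "API_KEY": re.compile(r"\b(?:sk|key)-[A-Za-z0-9]{16,}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(prompt: str) -> str:
    """Replace anything matching a known pattern with a labelled placeholder."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


example = "Contact jane.doe@example.com or +44 20 7946 0958; token sk-abcdef1234567890abcd"
print(redact(example))
```

Simple pattern matching like this will miss plenty, so it works best alongside a clear company policy on what may be shared with external AI tools, rather than as a replacement for one.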

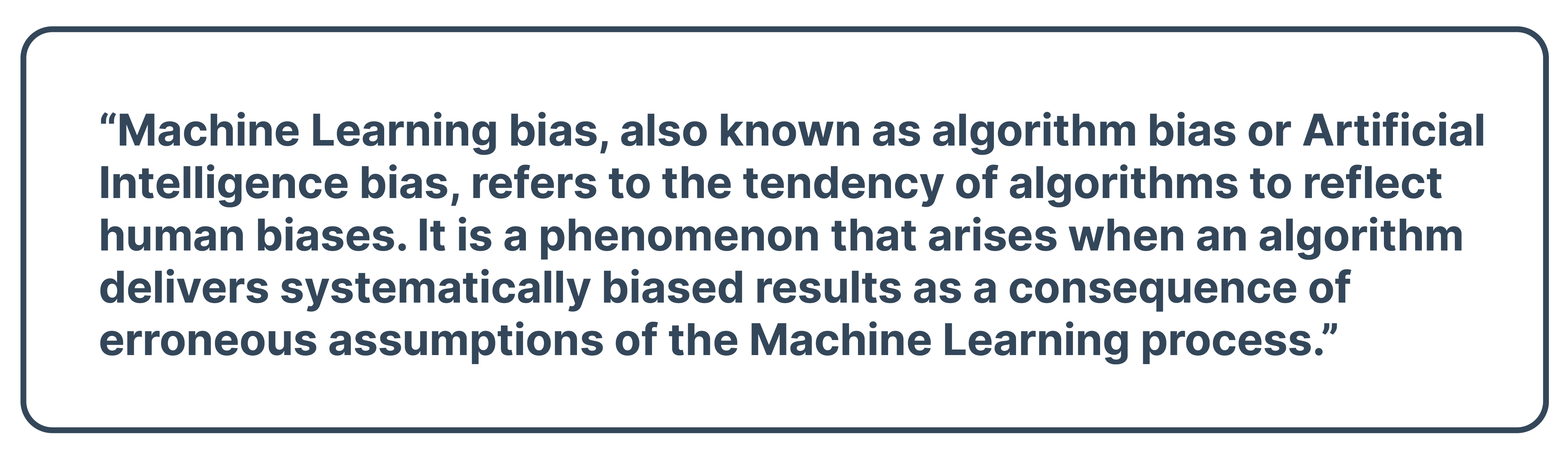

Bias

According to Levity,

These biases are nothing new, but as AI evolves, there is a real danger of harmful biases becoming part of its core processes. What does this look like in practice? Here are a few examples:

- In 2014, Amazon built an AI recruitment program to review the resumes of job applicants. It wasn’t until 2015 they realised that the system discriminated against women for technical roles.

- COMPAS is a risk assessment tool used in the United States to predict the likelihood of recidivism. In 2016, ProPublica investigated COMPAS and found that the system was more likely to wrongly label black defendants as being at high risk of reoffending than white defendants. This finding raised concerns about the potential for bias in COMPAS.

- In 2016, Microsoft launched Tay, a Twitter bot experiment. Within 24 hours it had to be taken offline for sharing racist, homophobic, transphobic, sexist, and violent materials.

These examples are largely traditional AI, but as we’ve learned, generative AI uses the available datasets to create new content. If the content it is accessing is biased, then the content it creates will also contain those biases. And the cycle will continue indefinitely, putting many communities at genuine risk.
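One simple way to spot the kind of skew seen in the recruitment example is to compare how often a system selects people from each group. The sketch below is illustrative only: the data is made up, and the function names `selection_rates` and `disparate_impact_ratio` are our own. A ratio well below 1.0 suggests one group is being selected far less often than another; US employment guidance commonly treats anything under 0.8 (the "four-fifths" rule of thumb) as a warning sign worth investigating.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest.

    Assumes at least one group has a non-zero selection rate.
    """
    return min(rates.values()) / max(rates.values())


# Made-up screening outcomes, purely for illustration.
decisions = (
    [("women", True)] * 12 + [("women", False)] * 48
    + [("men", True)] * 30 + [("men", False)] * 30
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A check like this only measures outcomes; it does not explain why the gap exists, so a low ratio is a prompt for a closer human review rather than a verdict.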

Job Displacement

In 1930, John Maynard Keynes, who is regarded as the founder of modern macroeconomics, predicted that by 2030 people would work only 15 hours per week, largely as a result of technological advancement. That has yet to materialise, but AI is still having an impact on employment. In fact, a recent Goldman Sachs study found that generative AI could impact 300 million full-time jobs globally, leading to a significant disruption of the job market.

While there is no need to panic (yet), there are predictions that AI will replace large swathes of the workforce, without providing meaningful replacement careers.

Here are some of the ways that generative AI could disrupt the job market:

- Automation of tasks. Generative AI can be used to automate a wide range of tasks, including writing, translation, customer service, and even medical diagnosis, potentially leading to job losses in these industries.

- Creation of new jobs. Generative AI could also create new jobs, such as AI engineers, data scientists, and content creators.

- Changes in the skill set required for jobs. Generative AI will require new skills and knowledge from workers. For example, workers will need to be able to understand how generative AI works and how to use it effectively.

- More profit. Generative AI could lead to increased profit for those who own and control generative AI technology. If not paired with a similar increase in wages for those working on and with the technology, this could widen inequality on a global scale.

Source: Bloomberg

How to Practice Responsible AI Usage

This all seems scary and apocalyptic, but AI is a tool like any other that can be harnessed for good as well. The key is to be aware of the ethical implications and act in a deliberate manner to deal with them.

The United Nations Educational, Scientific and Cultural Organization (UNESCO) is a specialised agency of the United Nations aimed at promoting world peace and security through international cooperation in education, arts, sciences and culture. It provides 10 recommendations for a “human-centric” approach to the ethics of AI, which are a great place to start.

- Proportionality and Do No Harm

The use of AI systems must not go beyond what is necessary to achieve a legitimate aim. Risk assessment should be used to prevent harms which may result from such uses.

- Safety and Security

Unwanted harms (safety risks) as well as vulnerabilities to attack (security risks) should be avoided and addressed by AI actors.

- Right to Privacy and Data Protection

Privacy must be protected and promoted throughout the AI lifecycle. Adequate data protection frameworks should also be established.

- Multi-stakeholder and Adaptive Governance & Collaboration

International law & national sovereignty must be respected in the use of data. Additionally, the participation of diverse stakeholders is necessary for inclusive approaches to AI governance.

- Responsibility and Accountability

AI systems should be auditable and traceable. There should be oversight, impact assessment, audit and due diligence mechanisms in place to avoid conflicts with human rights norms and threats to environmental well-being.

- Transparency and Explainability

The ethical deployment of AI systems depends on their transparency & explainability (T&E). The level of T&E should be appropriate to the context, as there may be tensions between T&E and other principles such as privacy, safety and security.

- Human Oversight and Determination

Member States should ensure that AI systems do not displace ultimate human responsibility and accountability.

- Sustainability

AI technologies should be assessed against their impacts on ‘sustainability’, understood as a set of constantly evolving goals including those set out in the UN’s Sustainable Development Goals.

- Awareness & Literacy

Public understanding of AI and data should be promoted through open & accessible education, civic engagement, digital skills & AI ethics training, media & information literacy.

- Fairness and Non-Discrimination

AI actors should promote social justice, fairness, and non-discrimination while taking an inclusive approach to ensure AI’s benefits are accessible to all.

With these in mind, here are a few things businesses and individuals can do to ensure AI is used and developed ethically:

- Learn about AI ethics. There are a number of resources available to help you learn about AI ethics. You can read articles, watch videos, and attend workshops.

- Create AI policies in your business. Ensure that any employee knows exactly what is and isn’t acceptable, and ensure you’re putting “people first”.

- Support organisations that are working to promote ethical AI. You can support these organisations by donating money, volunteering your time, or spreading the word about their work.

By taking these steps, you can help to ensure that AI is used for good and not for harm.

Implementing a successful AI strategy relies on analysing vast amounts of data. Try Hurree today for free, and see for yourself how you can use our dashboards to display all the data you need.
